Not long ago, organizations cited a range of concerns and excuses to avoid putting their production workloads in containers. Things have changed, to say the least. With Docker, container technology has gained wide acceptance, and users now download millions of container images daily.

Docker containers offer an efficient and convenient way to ship software reliably, without the traditional challenges developers encountered when moving software from development to the live environment. Because all the configuration files, libraries, and dependencies required to run the application are bundled with it in a container, the software behaves the same way wherever it is shipped.

Despite all its positives, Docker isn’t a silver bullet for everything that can go wrong with an application. When an issue arises, developers or DevOps professionals need access to logs for troubleshooting. This is where things get a little tricky. Logging in Docker isn’t the same as logging elsewhere. In this article, we’ll discuss what makes logging in Docker different, along with the best practices for Docker logging.

Challenges With Docker Logging

Unlike traditional application logging, there are several methods for managing application logs in Docker. Organizations can use data volumes to store logs, as the volume’s directory can hold data even when a container fails or shuts down. Alternatively, there are several logging drivers available which, after minor configuration, allow teams to forward their log events to a syslog instance running on their host. For first-time users, identifying which of these methods suits their requirements isn’t always straightforward.
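As a rough illustration of the configuration a logging driver needs, the sketch below assembles a docker run command line that selects the syslog driver for a single container. The --log-driver and --log-opt syslog-address flags are Docker’s own; the helper name, the image name, and the address udp://127.0.0.1:514 are placeholders assumed for this example.

```python
import shlex


def docker_run_with_syslog(image, syslog_address="udp://127.0.0.1:514"):
    """Build (but do not execute) a `docker run` argv that uses the syslog driver."""
    return [
        "docker", "run", "--detach",
        "--log-driver", "syslog",
        "--log-opt", f"syslog-address={syslog_address}",
        image,
    ]


if __name__ == "__main__":
    # "example/web:latest" is a hypothetical image name.
    print(shlex.join(docker_run_with_syslog("example/web:latest")))
```

The function only builds the argument list, so it can be inspected or logged before anything is handed to the Docker CLI.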

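One has to consider the limitations of each method. For instance, when using logging drivers, one can face challenges in log parsing. Inspecting logs with the “docker logs” command isn’t possible in every setup: it has traditionally worked only with drivers that keep a local copy of the logs, such as json-file and journald, although recent Docker Engine releases add a local cache that makes the command usable with other drivers as well. Further, Docker logging drivers generally treat every line as a separate event, so they don’t handle multi-line logs such as stack traces well.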
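One common workaround for the multi-line limitation is to stitch continuation lines back together after collection. The sketch below assumes a simple heuristic: any line that starts with whitespace continues the previous event, as in many stack traces. Real applications may need a different rule, and group_multiline is a name invented for this example.

```python
def group_multiline(lines):
    """Merge lines that start with whitespace into the preceding log event."""
    events = []
    for line in lines:
        line = line.rstrip("\n")
        if events and line[:1].isspace():
            # Continuation line: append it to the event being built.
            events[-1] += "\n" + line
        else:
            # Any other line (including an empty one) starts a new event.
            events.append(line)
    return events


if __name__ == "__main__":
    sample = ["ERROR request failed", "  at handler()", "  at main()", "INFO recovered"]
    for event in group_multiline(sample):
        print(repr(event))
```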

Moreover, complexity increases when managing and analyzing a large number of container logs from Docker Swarm. Very often, containers start multiple processes, and the containerized applications generate a mix of log streams containing plain text messages, unstructured logs, and structured logs in different formats. In such cases, parsing logs becomes challenging, as it isn’t simple to map every log event to the container or app producing it.
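To make the parsing problem concrete, here is a minimal sketch that reads entries in the format written by the json-file driver, where each line is a JSON object with log, stream, and time fields. It then tries to decode the message itself as structured JSON and falls back to plain text. The container ID is supplied by the caller because the entry doesn’t normally carry it; the function name and sample data are invented for illustration.

```python
import json


def parse_entry(raw_line, container_id):
    """Parse one json-file driver line and attach the producing container."""
    entry = json.loads(raw_line)
    message = entry["log"].rstrip("\n")
    try:
        payload = json.loads(message)
        structured = isinstance(payload, dict)
    except json.JSONDecodeError:
        payload, structured = None, False
    return {
        "container": container_id[:12],  # short ID, as shown by `docker ps`
        "stream": entry["stream"],
        "time": entry["time"],
        "structured": structured,
        "fields": payload if structured else {"message": message},
    }


if __name__ == "__main__":
    raw = json.dumps({"log": "server started\n", "stream": "stdout",
                      "time": "2024-01-01T00:00:00.000000000Z"})
    print(parse_entry(raw, "0123456789abcdef0123456789abcdef"))
```

Normalizing both kinds of message into one dictionary shape is a design choice made here so that downstream search and filtering can treat every event the same way.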

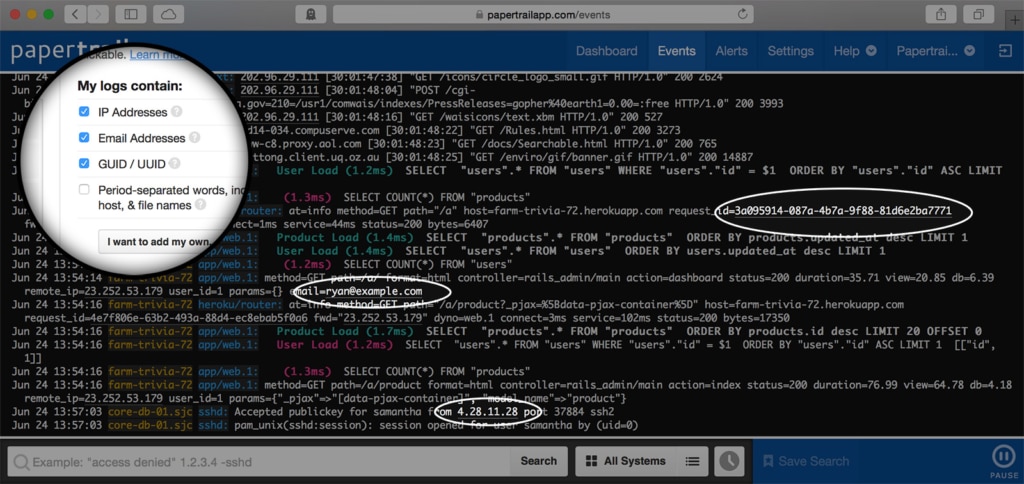

Creating a centralized log management and analytics setup or using a cloud-based solution like SolarWinds® Papertrail™ can help in solving the above challenges. Papertrail simplifies log management with a quick setup and support for all common logging frameworks for log ingestion. It parses your logs and streamlines troubleshooting with simple search and filtering. You can tail logs and view real-time events in its event viewer, which provides a clean view of events in infinite scroll with options to pause the feed or skip to specific time frames. Check out the plans or get a free trial of Papertrail here.

Below are some tips and best practices for logging in Docker.

Best Practices for Docker Logging

- Centralized Log Management

There was a time when an IT administrator could SSH into different servers and analyze their logs using simple grep and awk commands. While the commands still function as before, due to the complexity of modern microservices and container-based architectures, traditional methods for log analysis aren’t sustainable anymore. With several containers producing a large volume of logs, log aggregation and analysis become highly challenging.

This is where cloud-based centralized log management tools help in efficient and effective analysis of such logs. Moreover, one can also use the same tools to manage infrastructure logs (containerized infrastructure services, Docker Engine, etc.). With both application and infrastructure logs in one place, teams can easily monitor their entire ecosystem, correlate data, find anomalies and troubleshoot issues faster.

It’s not an easy task to monitor an endless stream of logs and find the information relevant to resolving an issue. To make things simpler when collecting logs from a large number of containers, organizations can tag their logs using the first 12 characters of the container ID. The tags can also be customized with other container attributes to simplify the search.
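As a sketch of this tagging approach, Docker’s tag log option accepts a template in which {{.ID}} expands to the first 12 characters of the container ID and {{.Name}} to the container name. The helpers below only assemble the option and compute a short ID; their names and the chosen tag layout are assumptions for illustration.

```python
def tag_log_opt(template="{{.Name}}/{{.ID}}"):
    """Return the --log-opt arguments that set a custom log tag template."""
    return ["--log-opt", f"tag={template}"]


def short_id(full_container_id):
    """Return the 12-character short form of a full container ID."""
    return full_container_id[:12]


if __name__ == "__main__":
    print(" ".join(tag_log_opt()))
    # The 64-character ID below is made up for the example.
    print(short_id("4f5d2c1b9a8e" + "0" * 52))
```

Putting the container name before the short ID keeps tags readable for people while still letting a search narrow down to one exact container.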

- Security and Reliability

With modern log analysis tools, it’s easier to run full-text searches over a large volume of log data and get quick results. However, application logs can contain a lot of sensitive data, which shouldn’t fall into the wrong hands. Messages sent over a syslog connection should be encrypted to prevent this.

While using the syslog driver over TCP or TLS is a reliable way to deliver logs, temporary network issues or high network latency can interrupt real-time monitoring. When the syslog server is unreachable, the Docker syslog driver can block the deployment of containers and also lose logs. To avoid this, teams can install the syslog server on the host. Alternatively, they can use a dedicated syslog container, which forwards the logs to a remote server.
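The encryption and delivery concerns above map to a handful of driver options. The following sketch generates a daemon.json fragment that points the syslog driver at a TLS endpoint and switches log delivery to non-blocking mode, which trades possible message loss when the buffer fills for less risk of a slow server stalling the application. The option names are Docker’s; the host name, port, certificate path, and buffer size are placeholder assumptions.

```python
import json


def syslog_daemon_config(address, ca_cert, max_buffer="4m"):
    """Build a daemon.json fragment for TLS syslog with non-blocking delivery."""
    return {
        "log-driver": "syslog",
        "log-opts": {
            # All log-opts values must be strings in daemon.json.
            "syslog-address": address,
            "syslog-tls-ca-cert": ca_cert,
            "mode": "non-blocking",
            "max-buffer-size": max_buffer,
        },
    }


if __name__ == "__main__":
    # Hypothetical endpoint and certificate path.
    config = syslog_daemon_config("tcp+tls://logs.example.com:6514",
                                  "/etc/docker/certs/ca.pem")
    print(json.dumps(config, indent=2))
```

Non-blocking mode may not help if the driver cannot connect at all when a container starts, so a local syslog server or relay container remains a reasonable safeguard.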

- Real-Time Response

For real-time monitoring, teams can use the --follow option of the docker logs command. It works much like the conventional tail -f command and helps in watching logs in production environments to identify issues proactively. Log management tools like SolarWinds Loggly® and Papertrail can further simplify real-time monitoring from multiple sources, with unified dashboards giving a quick overview of the environment. Integrating the log management solution with notification tools like Slack, PagerDuty, and VictorOps is also crucial. With these integrations, IT administrators can configure intelligent alerts to stay on top of their Docker application logs.
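For a sense of what following a container’s log looks like in a script, the sketch below wraps docker logs with its --follow, --tail, and --timestamps options and yields only the lines containing a keyword. It assumes a local Docker CLI and a running container; the function names, the container name, and the "ERROR" keyword are illustrative choices.

```python
import subprocess


def follow_command(container, tail=100):
    """Build the argv for following a container's log with timestamps."""
    return ["docker", "logs", "--follow", "--tail", str(tail),
            "--timestamps", container]


def stream_matching(container, needle="ERROR"):
    """Yield live log lines containing `needle`; needs a local Docker CLI."""
    proc = subprocess.Popen(
        follow_command(container),
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # container stderr arrives on the CLI's stderr
        text=True,
    )
    try:
        for line in proc.stdout:
            if needle in line:
                yield line.rstrip("\n")
    finally:
        # Stop the CLI process when the consumer stops iterating.
        proc.terminate()
        proc.wait()


if __name__ == "__main__":
    # "web" is a hypothetical container name; this only prints the command.
    print(" ".join(follow_command("web")))
```

A loop such as `for line in stream_matching("web"): ...` would then be the place to hand matching lines to an alerting integration.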

Top Tools for Docker Log Management

Open Source Tools: Organizations can build a robust log monitoring and analytics setup using various open-source tools. These tools may pose some configuration challenges, but strong community support can help address such issues. For instance, they can consider Telegraf or syslog with the Docker syslog driver for log collection, InfluxDB for storage, and Grafana and Chronograf for the user interface. Also, there are several guides available on using the ELK stack (Elasticsearch, Logstash, and Kibana) for Docker monitoring.

Commercial Tools: While an open-source tool for log management and analysis may appear to be an attractive option, it can take a lot of time and effort to set up a Docker log viewer. This is where commercial tools often have an advantage, as they come with dedicated support. Tools like Dynatrace, Papertrail, Loggly, Logentries, and Sentry also offer several advanced features to simplify troubleshooting. Further, most of these tools offer a free evaluation period.